We just do it. The Itility way. With teams of Itilians at our customers, we build digital solutions. We go above and beyond to bring value to our customers, driven by a passion for our trade: data, cloud, cybersecurity, software, and consultancy. Because passion is what fuels us in our work, we reach beyond roles and beyond job descriptions.

So, if you fit our Itility DNA and culture, then let’s explore your passion! We offer cool job vacancies in an environment where you can accelerate to become a best-in-class consultant or engineer.

Our culture and DNA

Push yourself to go one step further. We love our culture and our core values: PILSI, a Dutch acronym for Passie (Passion), Inhoud (Content), Leveren (Deliver), Samen (Together), and Innovatie (Innovation).

PILSI captures what we believe is most important in our work: everything starts with passion for what we do. That passion is powered by a hunger for knowledge and content, and by the drive to deliver beyond expectations. Always together, always as a team. And always innovating, becoming better every day, going one step beyond.

Successful companies choose Itility

Itility Academy

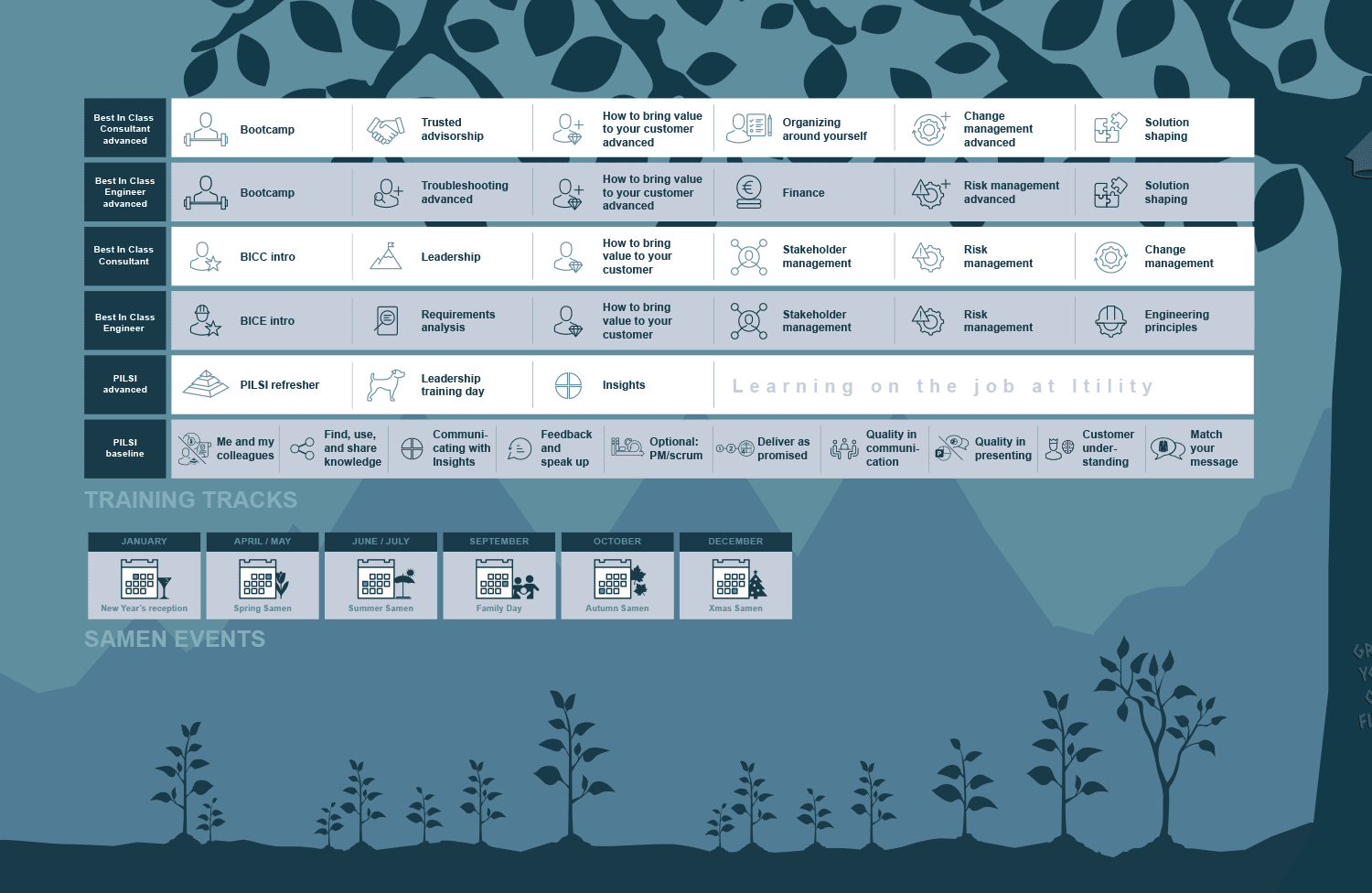

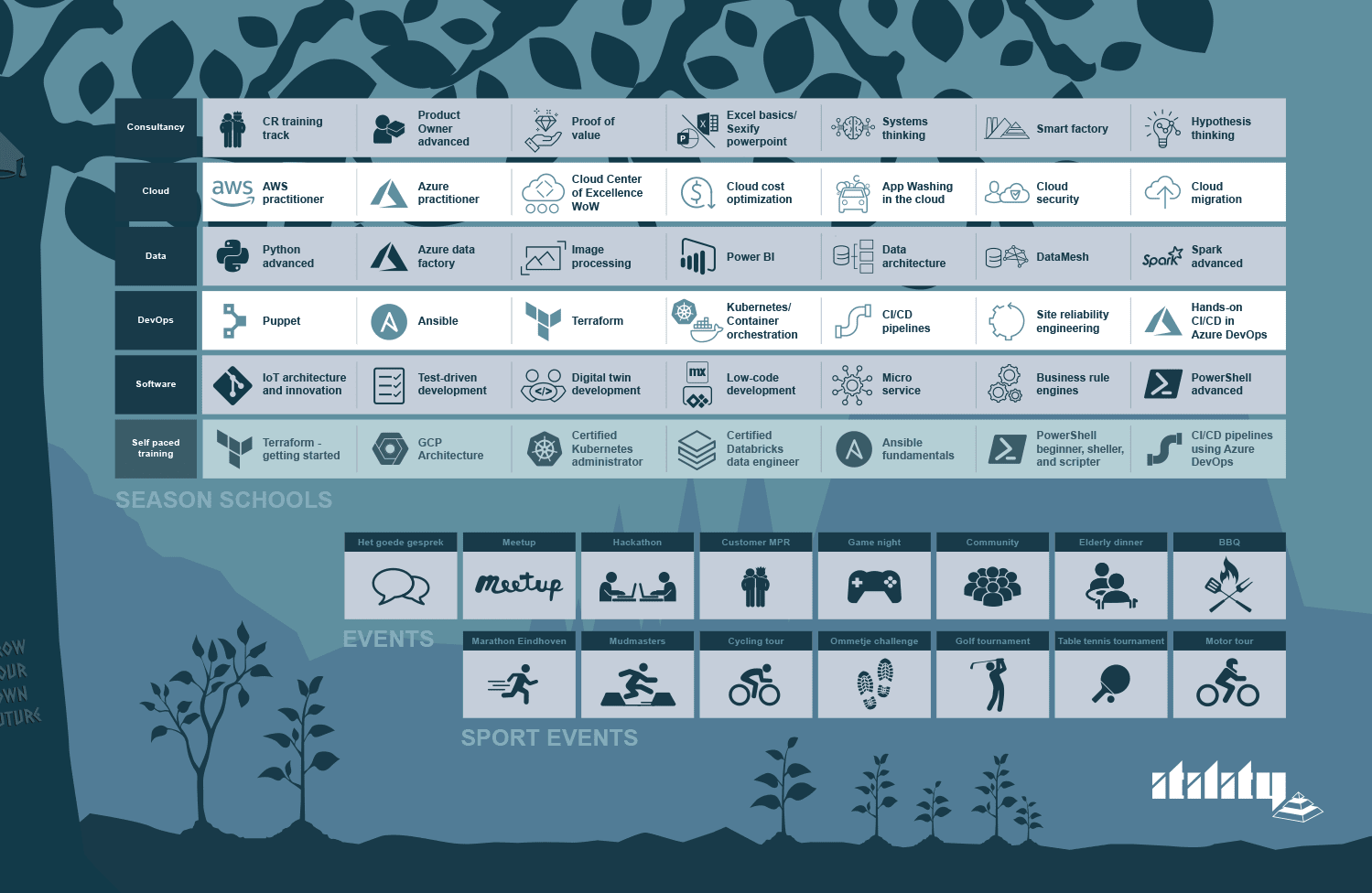

Your development is key. With our Itility Academy, you can develop your knowledge of content and tools, as well as your technical and soft skills. Each month, a wide variety of training sessions is scheduled to help you grow as a consultant or engineer.

Exclusive programs

Are you a young talent who has (almost) graduated from university or has at most one year of work experience? Or do you want to develop your career as an advanced professional? Then join one of our exclusive programs!

Young Professional Program

The Young Professional Program (YPP) is designed to help you kick-start your career within months. We train, guide, and coach you on both personal and technical skills, based on your area of passion (cybersecurity, data science, software, or cloud).

Advanced Professional Program

The Advanced Professional Program (APP) is a training program that is fully tailored to your ambitions. It is a unique opportunity to gain training, practice, real-life experience, and numerous certifications on your way to becoming a best-in-class consultant or engineer. You determine the content of the program, so your ambition defines your path.